BibTex

@InProceedings{Zhu_2023_CVPR_tryondiffusion,

author={Zhu, Luyang and Yang, Dawei and Zhu, Tyler and Reda, Fitsum and Chan, William and Saharia, Chitwan and Norouzi, Mohammad and Kemelmacher-Shlizerman, Ira},

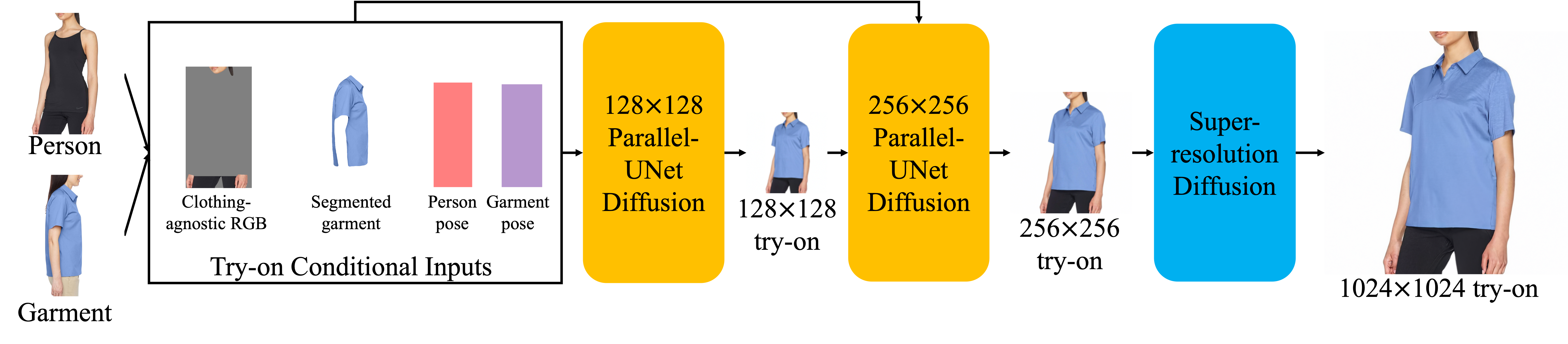

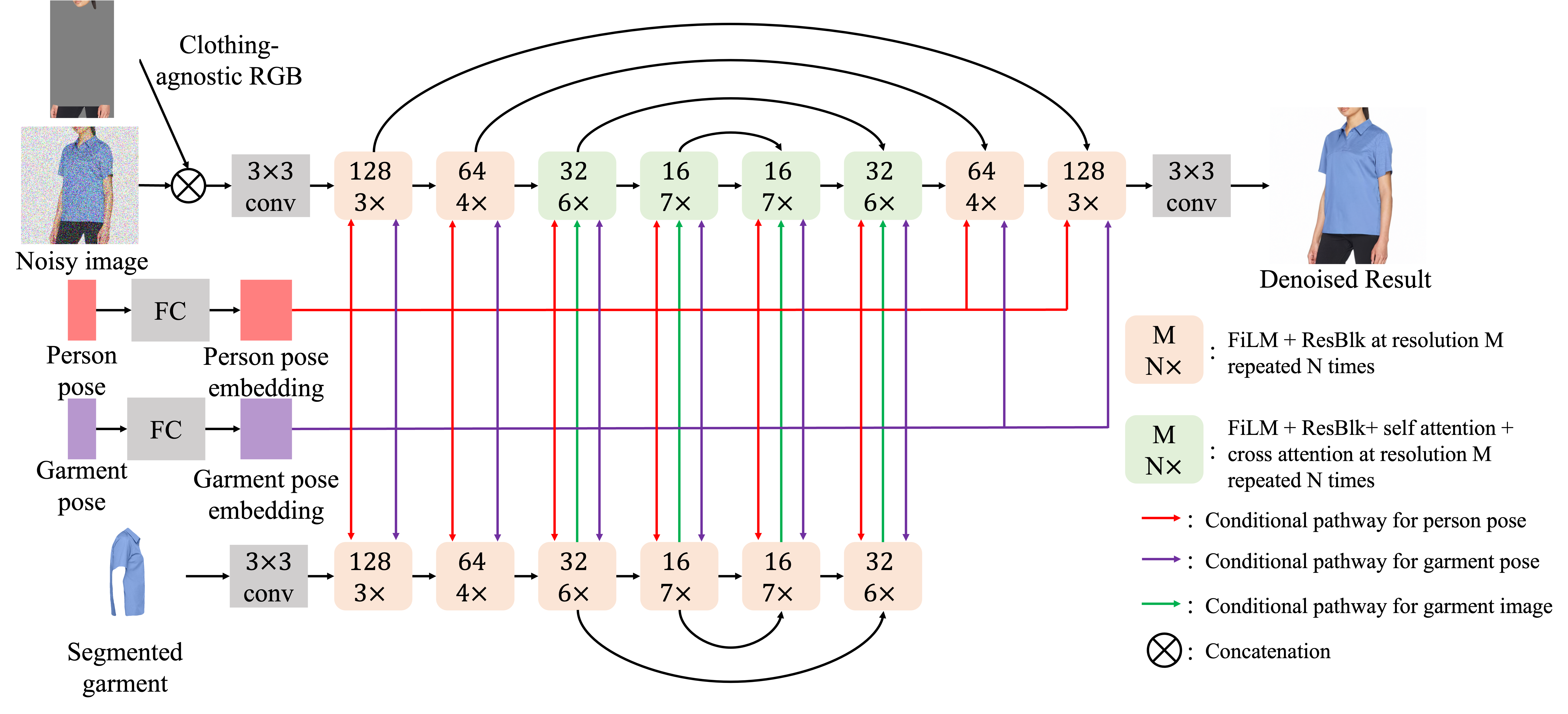

title={TryOnDiffusion: A Tale of Two UNets},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year={2023},

pages = {4606-4615}

}

Special Thanks

This work was done when all authors were at Google. Special Thank You to Yingwei Li, Hao Peng, Chris A. Lee, Alan Yang, Varsha Ramakrishnan, Srivatsan Varadharajan, David J. Fleet, and Daniel Watson for their insightful suggestions during the creation of this paper. We also are grateful for the kind support of the whole Google ARML Commerce organization.